Crypto.BI Toolbox comes with a raw .dat block file to MySQL insertion tool called blocks_inserter.

To use it, first you’ll need to generate the MySQL schema on your local server by importing scripts/sql/mysql/cryptobitoolbox_bitcoin.sql into your MySQL server.

Then you will need to configure Toolbox with the appropriate username, password, host and database so that Toolbox can access your MySQL server.

Run the toolbox/db/test_connection program to test your MySQL connectivity. If the program returns a success message and server version, then you’ve got the system set up correctly. Otherwise you’ll likely get an exception and abnormal program termination. If you do, then please refer to the configuration documentation.

If you’ve got everyting set up correctly, now you can run the blockchain to MySQL import tool:

$ ./toolbox/db/blocks_inserter

Depending on your hardware capabilities, each block file may take several minutes to get fully processed.

Keep in mind that there are currently over 1800 block data files. In a reasonably powerful modern PC this process might take several days.

Note: This is a heavy IO-bound process that will take a toll on your hard drives, especially if you’re running blocks_inserter on the same machine as your MySQL server.

Reserve at least 1 terabyte of free space on your database server data partition for the full blockchain.

Interruptions

If the program is interrupted and run again at a later time there are some things you should be aware of.

First of all, the insertion does NOT look like this:

if (!inserted(block or tx or ...)) {

insert(block or tx or ...);

}This would make the insertion process painfully slow, especially at the most nested levels (transactions, inputs and outputs).

Instead, the program tries to find the last inserted block file, the last block, the last transaction (TX) and inside the TX the last inputs and the last outputs – in this order. And then it tries to restart from there.

So, it finds the last processed block data file and restarts. Then you may see messages like these:

[2019-11-23 16:35:58.006] [stderr] [info] Error inserting block 000000000000015a13e8efa95791e183d11513d82cf32c6ccb5a8ce913023619

[2019-11-23 16:35:58.006] [stderr] [info] Duplicate entry 'xE8x8FgxE4Ytx96x98x80xA7Ux99l^x08x81x8CxAAxB3Tx1A+' for key 'hash_merkle_root'

[2019-11-23 16:35:58.069] [stderr] [info] Error inserting block 0000000000000105a091184edde7b17b96869992b9391803b8ca1dbee33f5c57

[2019-11-23 16:35:58.069] [stderr] [info] Duplicate entry '=xB0IxABx86+x86rxB9x91x89Zx7FxECzxC9x82x7FxFD5xF8' for key 'hash_merkle_root'

[2019-11-23 16:35:58.254] [stderr] [info] Error inserting block 000000000000007f8626a95609e54aebbea1c454ae066d57af9777357bfe9073

[2019-11-23 16:35:58.254] [stderr] [info] Duplicate entry 'x81xDCxC0xE9ixA2xCExAFx12xB0x7Fx0BNxE6EP/x03xA6vx' for key 'hash_merkle_root'

[2019-11-23 16:35:58.330] [stderr] [info] Error inserting block 000000000000015af92e0eb8a0761c81704711eff9380aa18f40c270daba4f9d

[2019-11-23 16:35:58.330] [stderr] [info] Duplicate entry 'x+FoxBDxDCxC5xFEx85xD3xD6xCEx80xDDzx02(xBEx96x93x' for key 'hash_merkle_root'These are OK if you see them after a restart and if one of the data files wasn’t completely processed (almost always the case).

It’s also OK to get duplicate TX hash notices on the blocks before BIP30 activation when some TX’s were mined in multiple blocks. In this case their hash would collide with previous hashes.

The reason for these messages right after a restart is because of what we explained above: the program restarts from point X and moves forward without checking each insert. So it might restart a few blocks before the last inserted block, generating duplicate notices. Same for TX’s, inputs and outputs.

It’s more efficient to let these errors happen and move on than to check each individual insert. Errors are the exception, inserts are the rule. If every insert were verified it’d make the process too slow.

On the other hand, if you’re seeing theses kinds of messages at random times during the insert, long after a restart and past the BIP30 fix, then it might indicate some other issue with your block data files.

Multithreaded Mode

blocks_insertercan be run in multithreaded mode.

Since Bitcoin does not prescribe that blocks should be stored in order on disk, we can process several block data files at once and the database will preserve integrity.

You can activate multiple threads by passing the threaded and threads command line parameters:

./toolbox/db/blocks_inserter --threaded 1 --threads 8

The --threaded 1 argument turns on multithreading and --threads 8 tells blocks_inserter to run 8 threads.

If --threadsis passed without --threaded then it will be ignored and the process will run in single thread mode.

Running in multithreaded mode will usually speed up the process greatly, but keep in mind this is a IO-bound process which will be limited by the block file read and database write speeds. If maximum IO through put is reached with 1 process, running 2 threads will likely not yield improvement.

Multithreaded operation might process files out of order. See the following example output:

% ./toolbox/db/blocks_inserter --threaded 1 --threads 8

[2019-11-25 12:27:35.243] [console] [info] Starting blocks inserter

[2019-11-25 12:27:37.324] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00007.dat IN 2.08143s 1 FILES DONE

[2019-11-25 12:27:37.609] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00006.dat IN 0.285143s 2 FILES DONE

[2019-11-25 12:27:37.723] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00003.dat IN 0.113904s 3 FILES DONE

[2019-11-25 12:27:37.766] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00005.dat IN 0.0422754s 4 FILES DONE

[2019-11-25 12:27:37.792] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00002.dat IN 0.0260299s 5 FILES DONE

[2019-11-25 12:27:37.859] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00001.dat IN 0.067636s 6 FILES DONE

[2019-11-25 12:27:37.901] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00004.dat IN 0.0416559s 7 FILES DONE

[2019-11-25 12:27:39.512] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00008.dat IN 1.61126s 8 FILES DONENote how file number 7 was finished before files 3,2,1 and so on. This is OK.

Insert Rate

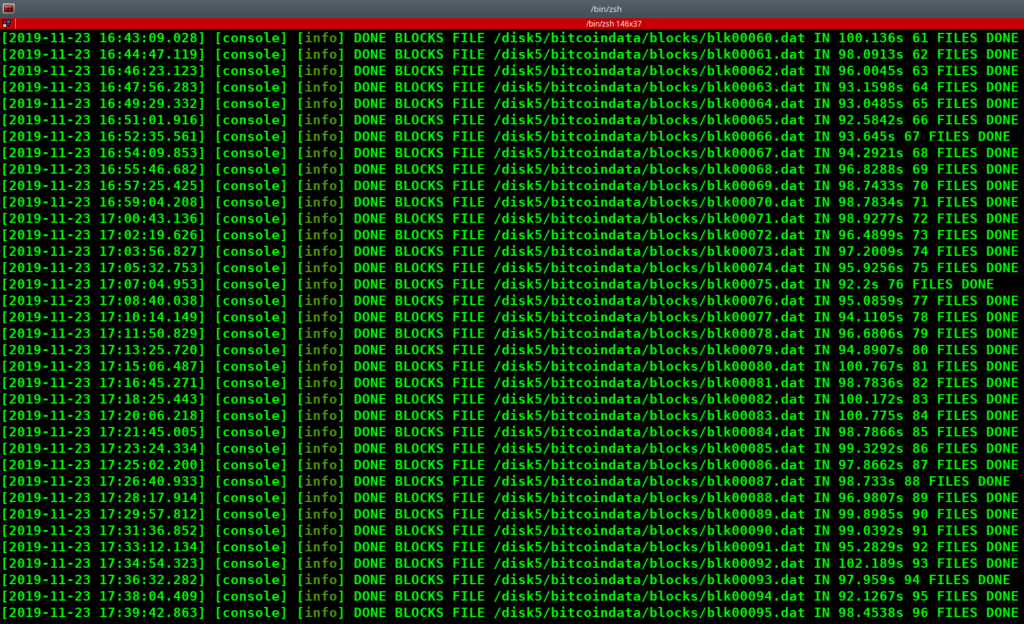

A normal process screen should look like this:

[2019-11-23 17:05:32.753] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00074.dat IN 95.9256s 75 FILES DONE

[2019-11-23 17:07:04.953] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00075.dat IN 92.2s 76 FILES DONE

[2019-11-23 17:08:40.038] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00076.dat IN 95.0859s 77 FILES DONE

[2019-11-23 17:10:14.149] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00077.dat IN 94.1105s 78 FILES DONE

[2019-11-23 17:11:50.829] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00078.dat IN 96.6806s 79 FILES DONE

[2019-11-23 17:13:25.720] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00079.dat IN 94.8907s 80 FILES DONE

[2019-11-23 17:15:06.487] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00080.dat IN 100.767s 81 FILES DONE

[2019-11-23 17:16:45.271] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00081.dat IN 98.7836s 82 FILES DONE

[2019-11-23 17:18:25.443] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00082.dat IN 100.172s 83 FILES DONE

[2019-11-23 17:20:06.218] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00083.dat IN 100.775s 84 FILES DONE

[2019-11-23 17:21:45.005] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00084.dat IN 98.7866s 85 FILES DONE

[2019-11-23 17:23:24.334] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00085.dat IN 99.3292s 86 FILES DONE

[2019-11-23 17:25:02.200] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00086.dat IN 97.8662s 87 FILES DONE

[2019-11-23 17:26:40.933] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00087.dat IN 98.733s 88 FILES DONE

[2019-11-23 17:28:17.914] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00088.dat IN 96.9807s 89 FILES DONE

[2019-11-23 17:29:57.812] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00089.dat IN 99.8985s 90 FILES DONE

[2019-11-23 17:31:36.852] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00090.dat IN 99.0392s 91 FILES DONE

[2019-11-23 17:33:12.134] [console] [info] DONE BLOCKS FILE /disk5/bitcoindata/blocks/blk00091.dat IN 95.2829s 92 FILES DONESpeeding Up the Insert

The above sample was taken on a Intel(R) Core(TM) i7-6700K CPU @ 4.00GHzIntel(R) Core(TM) i7-6700K CPU @ 4.00GHz machine, with 64 GB RAM, using LUKS encrypted 5400 RPM hard drives.

This is a heavy IO-bound process which would benefit greatly from SSD and non-encrypted drives. Encryption is unnecessary because blockchain data is publicly available.

To speed it up even further, you should run the MySQL (or other database) server on a separate machine with enough memory and SSD drives.

At the above rate of 100 seconds per block fine, it’ll take 2 days and 6 hours to complete the process for 2000 block files (at the time of this writing Bitcoin Core was at 18xx files). Using SSD non-encrypted drives with a well configured database server this can be easily reduced by 70% or more.

Links

Storing and Querying Bitcoin Blockchain Using SQL Databases [PDF]

To create a blockchain using MySQL database

Bitcointalk: SQL schema for the blockchain ?

sqlChain is a compact SQL layer that runs on top of bitcoind