If you come from the world of IT, then you’ve probably heard of database or disk data sharding.

For those who don’t come from IT, a really simple way to put it is this: sharding means dividing the data and the work into chunks (shards).

When you split the work, different agents (people, computers or even different processes within a single computer) take care of one or more of those pieces simultaneously.

The overall purpose of sharding is to increase the number of things that are achieved per unit of time (throughput).
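To make the idea concrete, here is a minimal Python sketch that is not tied to any particular system. It deals a list of numbers round-robin into shards and lets a pool of worker threads (standing in for the "agents") process the shards at the same time, then combines the partial results. The helper names `split_into_shards`, `process_shard` and `sharded_sum` are hypothetical, and summing numbers is an assumed placeholder for real work.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(items, num_shards):
    """Deal items round-robin into `num_shards` chunks (shards)."""
    return [items[i::num_shards] for i in range(num_shards)]


def process_shard(shard):
    # Hypothetical placeholder for real work: each agent just sums its numbers.
    return sum(shard)


def sharded_sum(items, num_shards=4):
    shards = split_into_shards(items, num_shards)
    # One worker per shard; the pool runs the shards concurrently.
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        partial_results = list(pool.map(process_shard, shards))
    # Combine the per-shard results into a single answer.
    return sum(partial_results)


print(split_into_shards(list(range(10)), 3))  # [[0, 3, 6, 9], [1, 4, 7], [2, 5, 8]]
print(sharded_sum(list(range(1, 101))))  # 5050
```

Threads keep the sketch short; for CPU-heavy work in CPython, a `ProcessPoolExecutor` is usually the better fit because of the global interpreter lock.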

In RAID systems for disks, for example, a technique called striping splits a file into several chunks spread across multiple disks, so the chunks can be read or written by all of those disks at the same time. This can multiply disk throughput, ideally by up to the number of disks involved.
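A simplified model of striping (the technique behind RAID 0) fits in a few lines of Python. The sketch below only shows the data layout: it assumes in-memory lists stand in for disks and ignores the concurrent I/O a real RAID controller performs. `stripe` and `reassemble` are hypothetical helper names.

```python
def stripe(data: bytes, num_disks: int, stripe_size: int) -> list:
    """Spread `data` over `num_disks` simulated disks, one stripe at a time."""
    disks = [[] for _ in range(num_disks)]
    for index, start in enumerate(range(0, len(data), stripe_size)):
        # Stripe 0 goes to disk 0, stripe 1 to disk 1, ... wrapping around.
        disks[index % num_disks].append(data[start:start + stripe_size])
    return disks


def reassemble(disks: list) -> bytes:
    """Read stripes back in their original order: disk 0, disk 1, ..., repeat."""
    chunks = []
    index = 0
    while True:
        disk = disks[index % len(disks)]
        position = index // len(disks)
        if position >= len(disk):
            break  # the next stripe in sequence does not exist, so we are done
        chunks.append(disk[position])
        index += 1
    return b"".join(chunks)


disks = stripe(b"ABCDEFGHIJ", num_disks=3, stripe_size=2)
print(disks)  # [[b'AB', b'GH'], [b'CD', b'IJ'], [b'EF']]
print(reassemble(disks))  # b'ABCDEFGHIJ'
```

Because consecutive stripes live on different disks, a large read can pull from all of them at once. Note that RAID 0 keeps no redundant copy, so losing any one disk loses the file.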

Paying a High Price For Consensus

The main challenge for all forms of decentralized money is to reach global consensus.

How much money does address X have? How much money has X transferred to Y?

Without consensus on these facts, cryptocurrencies could not form a viable financial system.

Cryptocurrency users need a way to guarantee to mutually distrustful parties that the balances they see for one another are correct.

Everyone must also be convinced that transactions happened successfully – and mining provides this guarantee.
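Why does agreement on the order of transactions matter so much? This small Python sketch (with hypothetical account names and a made-up `apply_transactions` helper) replays the same two payments in two different orders. Alice has only 10 coins but tries to send 10 to Bob and 10 to Carol; whichever payment a node sees first wins, so two honest nodes end up with different balances unless something, such as mining, makes everyone agree on a single history.

```python
def apply_transactions(initial_balances, transactions):
    """Replay (sender, receiver, amount) tuples in order, rejecting overspends."""
    balances = dict(initial_balances)
    for sender, receiver, amount in transactions:
        if balances.get(sender, 0) >= amount:
            balances[sender] -= amount
            balances[receiver] = balances.get(receiver, 0) + amount
        # Otherwise the sender cannot cover the amount and the payment is ignored.
    return balances


genesis = {"alice": 10}  # hypothetical starting balances
pay_bob = ("alice", "bob", 10)
pay_carol = ("alice", "carol", 10)  # a double spend of the same 10 coins

node_a = apply_transactions(genesis, [pay_bob, pay_carol])
node_b = apply_transactions(genesis, [pay_carol, pay_bob])
print(node_a)  # {'alice': 0, 'bob': 10}
print(node_b)  # {'alice': 0, 'carol': 10}
```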

Cryptocurrency Sharding

So how does this idea get applied to cryptocurrencies?

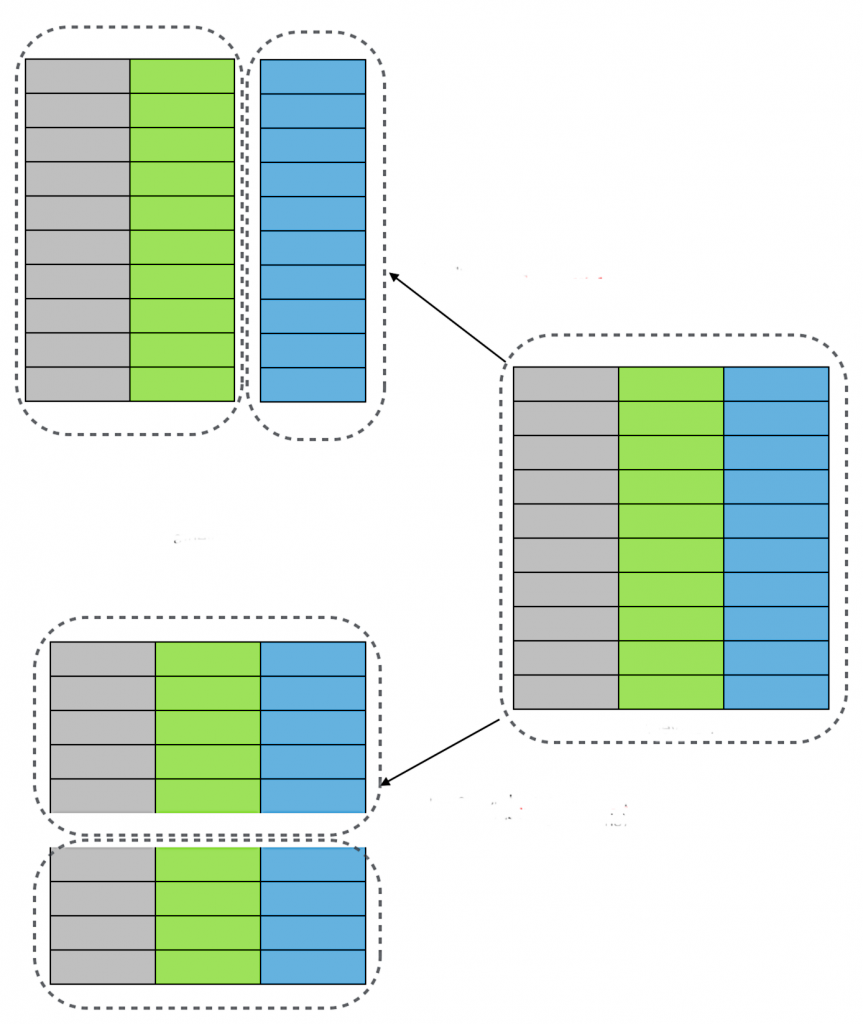

The general idea is to split the data being mined into smaller chunks that multiple miners can then process at the same time. Since the blockchain is one linear data structure where each block depends on the previous one, the basic structure of the blockchain must be adapted before sharding becomes possible.
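The "each block depends on the previous one" property is easy to see in a toy hash chain. In this Python sketch each block stores the SHA-256 hash of the block before it, so changing any earlier block breaks the link that the next block relies on. This is a simplification: the JSON encoding and the field names are assumptions for illustration (Bitcoin, for instance, hashes a binary block header with double SHA-256).

```python
import hashlib
import json


def block_hash(block):
    # Hash a canonical JSON encoding of the block (a simplification for illustration).
    encoded = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def make_block(prev_hash, transactions):
    # Hypothetical block layout: a link to the parent plus a list of transactions.
    return {"prev_hash": prev_hash, "transactions": transactions}


def is_valid_chain(chain):
    # Every block must commit to the hash of the block immediately before it.
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


genesis = make_block("0" * 64, ["coinbase -> alice"])
block1 = make_block(block_hash(genesis), ["alice -> bob"])
block2 = make_block(block_hash(block1), ["bob -> carol"])
chain = [genesis, block1, block2]
print(is_valid_chain(chain))  # True

# Tampering with an early block breaks the link that the next block depends on.
genesis["transactions"] = ["coinbase -> mallory"]
print(is_valid_chain(chain))  # False
```

Because block N can only be built once block N-1's hash is known, the chain is inherently sequential, which is why it cannot simply be cut into pieces that different miners extend independently.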

As you probably know, crypto mining consumes a lot of energy.

Proof of Work cryptocurrencies like Bitcoin (and Ethereum, before it switched to Proof of Stake in 2022) cost tens, even hundreds, of thousands of dollars per minute to maintain globally.

A lot of current research aims to find innovative ways to make better use of mining resources.

PoW Mining

Proof of Work (PoW) mining solves the consensus problem.

(It is arguably Satoshi’s greatest contribution to the development of decentralized electronic money.)

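Each network participant (miner) performs a bit of work: it hashes a candidate block over and over, changing a number called the nonce each time, until one of the participants finds a hash that meets the network’s difficulty target. That solution is costly to produce but easy for everyone else to verify, which is what guarantees the integrity of transactions.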
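Here is a toy version of that search in Python. It is a sketch under simplifying assumptions: the "difficulty" is a required number of leading hex zeros, whereas Bitcoin compares the double SHA-256 of the block header against a numeric target that the network adjusts over time. The block contents string and the `pow_hash`, `mine` and `verify` names are hypothetical.

```python
import hashlib


def pow_hash(block_data: str, nonce: int) -> str:
    # Simplified: hash the block contents together with the candidate nonce.
    return hashlib.sha256(f"{block_data}|{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int):
    """Try nonces 0, 1, 2, ... until the hash starts with `difficulty` hex zeros."""
    target_prefix = "0" * difficulty
    nonce = 0
    while not pow_hash(block_data, nonce).startswith(target_prefix):
        nonce += 1
    return nonce, pow_hash(block_data, nonce)


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    # Checking a solution takes a single hash, no matter how long mining took.
    return pow_hash(block_data, nonce).startswith("0" * difficulty)


nonce, digest = mine("alice -> bob: 5", difficulty=4)
print(nonce, digest)
print(verify("alice -> bob: 5", nonce, difficulty=4))  # True
```

Each extra zero multiplies the expected number of attempts by 16, while verification stays a single hash; that asymmetry is what makes the work costly to redo and cheap to check.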

If you split the work being done by miners into shards, then different groups of miners would process different chunks of transactions in order to share the load.
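One simple way to decide which group of miners handles which transactions is to hash the sender’s address and take the result modulo the number of shards. The Python sketch below assumes exactly that scheme, chosen here for illustration rather than taken from any specific project; `shard_for` and `partition` are hypothetical names.

```python
import hashlib
from collections import defaultdict


def shard_for(address: str, num_shards: int) -> int:
    # Use SHA-256 rather than Python's built-in hash(), which is randomized per
    # process, so that every node computes the same shard for the same address.
    digest = hashlib.sha256(address.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(transactions, num_shards):
    """Group (sender, receiver, amount) tuples by the shard of their sender."""
    shards = defaultdict(list)
    for tx in transactions:
        sender = tx[0]
        shards[shard_for(sender, num_shards)].append(tx)
    return dict(shards)


txs = [("alice", "bob", 5), ("bob", "carol", 2), ("carol", "dave", 1), ("dave", "alice", 3)]
for shard_id, shard_txs in sorted(partition(txs, num_shards=2).items()):
    # Each group of miners would only need to process its own list.
    print(shard_id, shard_txs)
```

Notice that a payment like alice -> bob may have its sender and receiver in different shards; such cross-shard transactions are where the coordination problems discussed later in this article come from.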

The result would be a lot more transactions being processed per second, effectively accomplishing what sharding set out to do.

Houston, we’ve had a problem

But there are enormous challenges in the way of implementing sharding.

Bitcoin has shown its strength by withstanding repeated attacks while its own algorithm kept regulating itself.

This is possible because attacking the entire Bitcoin network is so expensive that an attacker cannot sustain an attack for very long and still turn a profit.

What would happen, though, if the Bitcoin network were split into many shards, with each smaller group of miners processing its own chunk of the transactions, when such an attack came along?

Those who hold enormous hashpower could then concentrate all their efforts on a single, smaller group and sabotage Bitcoin much more easily.
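A back-of-the-envelope calculation shows why this is worrying. Assume (a simplification) that the honest hashpower H is split evenly across k shards, so each shard has H / k, and that an attacker with hashpower A throws everything at one shard. The attacker outpowers that shard once A > H / k, which, as a share of the whole network, means A / (H + A) > 1 / (k + 1). The hypothetical helper `min_attacker_share` below just evaluates that bound.

```python
def min_attacker_share(num_shards: int) -> float:
    """Share of total hashpower needed to outpower one shard.

    Assumes honest hashpower is spread evenly over `num_shards` shards and the
    attacker concentrates all of its hashpower on a single shard.
    """
    # A > H / k  is equivalent to  A / (H + A) > 1 / (k + 1).
    return 1 / (num_shards + 1)


for k in (1, 10, 100):
    print(f"{k:>3} shards: just over {min_attacker_share(k):.2%} of total hashpower")
# Prints:
#   1 shards: just over 50.00% of total hashpower
#  10 shards: just over 9.09% of total hashpower
# 100 shards: just over 0.99% of total hashpower
```

With one shard this is the familiar 50% threshold; with 100 shards, under these assumptions, roughly 1% of the network’s hashpower is enough to dominate one shard.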

Depending on the sharding strategy adopted, this could halt the entire network.

For example, if one part of the network depended on results from a smaller group of miners in order to keep functioning, an attacker could overpower that much smaller (and weaker) group and effectively DDoS the larger network.
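The knock-on effect can be modeled as reachability in a dependency graph. In this Python sketch the topology is entirely hypothetical: a small shard `S3` feeds two shards that the main chain relies on, while `S4` is independent. A breadth-first search finds every shard that stalls once `S3` stops producing results.

```python
from collections import deque


def halted_shards(depends_on, attacked):
    """Return every shard that stalls once `attacked` stops producing results.

    `depends_on` maps each shard to the shards whose output it needs
    (a hypothetical model of how shards might depend on one another).
    """
    # Invert the graph: for each shard, which shards consume its output?
    consumers = {shard: [] for shard in depends_on}
    for shard, inputs in depends_on.items():
        for upstream in inputs:
            consumers.setdefault(upstream, []).append(shard)

    # Breadth-first search downstream from the attacked shard.
    halted = {attacked}
    queue = deque([attacked])
    while queue:
        current = queue.popleft()
        for downstream in consumers.get(current, []):
            if downstream not in halted:
                halted.add(downstream)
                queue.append(downstream)
    return halted


topology = {
    "main": ["S1", "S2"],
    "S1": ["S3"],
    "S2": ["S3"],
    "S3": [],
    "S4": [],
}
print(sorted(halted_shards(topology, "S3")))  # ['S1', 'S2', 'S3', 'main']
print(sorted(halted_shards(topology, "S4")))  # ['S4']
```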

Reassembly

Another issue is how to assemble the shards back into a single, global consensus.

Since each node now processes only a smaller chunk of a larger data structure, it no longer holds the entire structure in its memory.

Nodes must then communicate with each other to exchange this missing data. The entire network must therefore “talk” a lot more in order to guarantee that everyone reaches the same consensus.

Researchers have found that this communication overhead can grow so large that it defeats the purpose of sharding in the first place.

It’d be like exchanging one obstacle for another.
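A quick simulation hints at why the chatter grows. Assume, for illustration only, that a transaction’s sender and receiver each land in a uniformly random shard, independently of each other. Then both are in the same shard with probability 1/k, so a fraction 1 - 1/k of all transactions cross shard boundaries and require messages between shards. The hypothetical helper `cross_shard_fraction` checks this by sampling.

```python
import random


def cross_shard_fraction(num_shards: int, num_txs: int = 100_000, seed: int = 1) -> float:
    """Estimate how many transactions touch two different shards.

    Assumes sender and receiver shards are independent and uniformly random.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    cross = 0
    for _ in range(num_txs):
        sender_shard = rng.randrange(num_shards)
        receiver_shard = rng.randrange(num_shards)
        if sender_shard != receiver_shard:
            cross += 1
    return cross / num_txs


for k in (1, 4, 16, 64):
    simulated = cross_shard_fraction(k)
    expected = 1 - 1 / k
    print(f"{k:>3} shards: simulated {simulated:.3f}, expected {expected:.3f}")
```

Under these assumptions, the more shards you add, the closer the share of cross-shard transactions gets to 100%, so the extra communication grows right along with the parallelism.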

CAP Theorem

While the purpose of this article is to keep things really simple, we must mention this one theorem just to point you in the right direction in case you want to dig further.

CAP is an acronym for Consistency, Availability and Partition tolerance.

Here’s what each piece means:

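Consistency: every read returns the most recent write, so everyone on the network sees the same, up-to-date data.

Availability: every request to a working node receives a reply, no matter when it is issued.

Partition tolerance: the system keeps working even when the network splits into groups of nodes that cannot communicate with each other because messages are lost or delayed.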

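The CAP theorem states that a distributed data store cannot guarantee all three at the same time. Since network partitions can never be ruled out in practice, this effectively means choosing between Consistency and Availability whenever a partition happens.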
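The trade-off can be felt with a deliberately tiny model: two replicas of one value that copy writes to each other while they are connected. During a partition, a "CP" store refuses requests (staying consistent but unavailable), while an "AP" store keeps answering (staying available but letting the replicas drift apart). The `TwoReplicaStore` class and its modes are hypothetical; real systems use quorums, timeouts and much richer recovery logic.

```python
class TwoReplicaStore:
    """Toy store holding one value on two replicas, "a" and "b"."""

    def __init__(self, mode: str):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.values = {"a": None, "b": None}
        self.partitioned = False  # True means "a" and "b" cannot reach each other

    def write(self, replica: str, value):
        if self.partitioned and self.mode == "CP":
            # Consistency first: refuse a write that cannot reach the other replica.
            raise RuntimeError("unavailable during partition")
        self.values[replica] = value
        if not self.partitioned:
            other = "b" if replica == "a" else "a"
            self.values[other] = value  # replicate while the link is up
        # In AP mode during a partition, the other replica silently goes stale.

    def read(self, replica: str):
        if self.partitioned and self.mode == "CP":
            raise RuntimeError("unavailable during partition")
        return self.values[replica]


ap = TwoReplicaStore("AP")
ap.write("a", 1)
ap.partitioned = True
ap.write("a", 2)
print(ap.read("a"), ap.read("b"))  # 2 1  (available, but inconsistent)

cp = TwoReplicaStore("CP")
cp.write("a", 1)
cp.partitioned = True
try:
    cp.write("a", 2)
except RuntimeError as err:
    print(err)  # unavailable during partition  (consistent, but not available)
```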

Decentralized networks are inherently heterogeneous.

Your home Internet connection is many times slower than the large fiber-optic trunks over which supercomputers communicate.

Poorer countries have slower access, while rich communities enjoy excellent connectivity.

And networks go down for all sorts of reasons.

Therefore, guaranteeing that everyone sees only the latest correct data (Consistency), or that they’ll receive a response to every query (Availability), is very difficult for any cryptocurrency network.

And, as we mentioned earlier, if someone with enough resources decided to attack a smaller chunk of the network, would Partition tolerance hold? Or would a sharded version of Bitcoin collapse?

Conclusion

There’s still much discussion about sharding. It is not entirely clear that sharding would increase overall network throughput while maintaining the reliability Bitcoin has displayed over the past decade.

Researchers are carefully weighing these trade-offs (CAP) because, so far, every attempt to increase blockchain throughput has caused some other variable to raise the cost of reaching consensus.

Storage and network activity are two parameters that grow substantially whenever sharding is attempted.

This increase often offsets the advantages enough to discourage the change from a single blockchain to a sharded model.

We hope this basic introduction has given you a better idea about sharding and the challenges involved in implementing it.

The main idea to keep in mind is that a fundamental theorem of data storage says you cannot have Consistency, Availability and Partition tolerance all at the same time. This is a limiting factor for blockchain throughput, because every time you split the workload, another component seems to become more complex and offset the advantages of distributing the chores.
